It's not news that healthcare is expensive. Here is a quick look into what is going on. Why this is going on is a different question that I'll come back to.

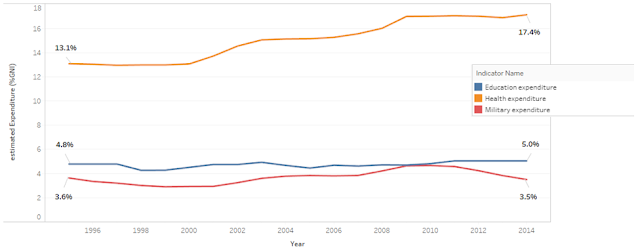

I looked through World Bank WDI data (link) to visualize three spending areas that get significant attention:

- Education expenditures

- Healthcare expenditures

- Military spending

The data covers only the United States, from 1995 to 2014. Figures 1 and 2 below show the results.

Education and military spending, as a percentage of gross national income (GNI), have stayed roughly flat over these 20 years. Education has hovered around 5% and military spending around 3.5% (with a temporary jump in 2009 to almost 5%).

Healthcare spending, however, has risen as a share of GNI over the same period by about 4 percentage points (from around 13% to over 17%).
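The rise in healthcare's share described above is a change of about 4 percentage points of GNI, which is not the same thing as a 4% increase. A quick sketch of the arithmetic, using the rounded endpoints quoted in the text (13% and 17%; the actual end value is a little higher, so the real change is slightly larger):

```python
# Rounded healthcare spending shares of GNI quoted in the text (approximate).
share_start = 13.0  # percent of GNI, around 1995
share_end = 17.0    # percent of GNI, around 2014 ("over 17%")

# Absolute change, measured in percentage points of GNI.
point_change = share_end - share_start

# Relative change in the share itself, as a percent of the starting share.
relative_change = (share_end - share_start) / share_start * 100

print(f"Change: {point_change:.1f} percentage points")     # Change: 4.0 percentage points
print(f"Relative change in share: {relative_change:.1f}%")  # Relative change in share: 30.8%
```

In other words, healthcare's slice of national income grew by roughly 31% relative to where it started.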

Fig 1 - Expenditures as % of GNI (note: for the US, GNI and GDP are very close, so I have used the two interchangeably)

In US dollars, spending over the same period grew as follows:

- Education increased from ~$364 billion to ~$904 billion

- Military increased from ~$271 billion to ~$627 billion

- Healthcare increased from ~$995 billion to over $3 trillion

Fig 2 - Expenditures in US$
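One way to compare the dollar figures listed above is to turn each pair of endpoints into an overall growth multiple and an implied compound annual growth rate (CAGR). This is a sketch under two assumptions the post does not state: that the figures are nominal (not inflation-adjusted) dollars, and that they are the 1995 and 2014 values. "Over $3 trillion" is rounded down to 3000 billion, and the helper name `growth_summary` is purely illustrative.

```python
# Approximate US spending in billions of US$ (start, end), as quoted in the text.
# Assumption: nominal dollars, endpoints are 1995 and 2014.
spending = {
    "Education": (364, 904),
    "Military": (271, 627),
    "Healthcare": (995, 3000),  # "over $3 trillion", rounded down
}
years = 2014 - 1995  # 19 yearly steps between the two endpoints


def growth_summary(start, end, n_years):
    """Return (overall multiple, compound annual growth rate) between two values."""
    multiple = end / start
    cagr = multiple ** (1 / n_years) - 1
    return multiple, cagr


results = {name: growth_summary(s, e, years) for name, (s, e) in spending.items()}

for name, (multiple, cagr) in results.items():
    print(f"{name:<11} grew {multiple:.2f}x, about {cagr:.1%} per year")
```

Because healthcare started from the largest base and also grew fastest (roughly 6% a year versus roughly 4.5% to 5% for the other two), its dollar gap with education and military spending widens over time.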

The Centers for Medicare and Medicaid Services maintains a breakdown of healthcare spending (link). The summary of spending (PDF) shows that over 50% goes towards hospital and physician services.

Fig 3 - Breakdown of healthcare spending in the US in 2015

- Hospital care spending increased by 5.6% in 2015, while prices increased only 0.9%. This implies that most of the growth in hospital spending came from increased use and intensity of services rather than from higher prices.

- Spending on physician services increased by 6.3% in 2015, while prices declined by 1.1%. This implies that physician spending was driven entirely by increased use of services, not by price.
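The reasoning in the two bullets above rests on splitting nominal spending growth into a price part and a quantity part (use and intensity of services). A minimal sketch, assuming the multiplicative identity (1 + spending growth) = (1 + price growth) × (1 + quantity growth); this is a standard approximation, not necessarily the exact method CMS uses, and `volume_growth` is a hypothetical helper name:

```python
def volume_growth(spending_growth, price_growth):
    """Implied growth in quantity/intensity, given nominal spending and price growth.

    Assumes the multiplicative identity:
        (1 + spending) = (1 + price) * (1 + quantity)
    All rates are fractions, e.g. 0.056 for 5.6%.
    """
    return (1 + spending_growth) / (1 + price_growth) - 1


# 2015 figures quoted in the text.
hospital = volume_growth(0.056, 0.009)    # spending +5.6%, prices +0.9%
physician = volume_growth(0.063, -0.011)  # spending +6.3%, prices -1.1%

print(f"Hospital care: ~{hospital:.1%} growth from use and intensity")         # ~4.7%
print(f"Physician services: ~{physician:.1%} growth from use and intensity")  # ~7.5%
```

For physician services, falling prices mean the implied growth in use and intensity (about 7.5%) is actually larger than the growth in spending itself.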

Taken together, this suggests that the largest spending categories are growing mainly because people are receiving more care, or more intensive (and expensive) care, rather than because prices are rising.

The interesting next question is: what is driving that increased need or intensity of care and how can those root causes be addressed?